Parents sue OpenAI after teen’s suicide linked to ChatGPT conversations

This story discusses suicide. If you or someone you know is having thoughts of suicide, please contact the Suicide & Crisis Lifeline at 988 or 1-800-273-TALK (8255).

Two California parents are suing OpenAI over its alleged role in their son’s suicide.

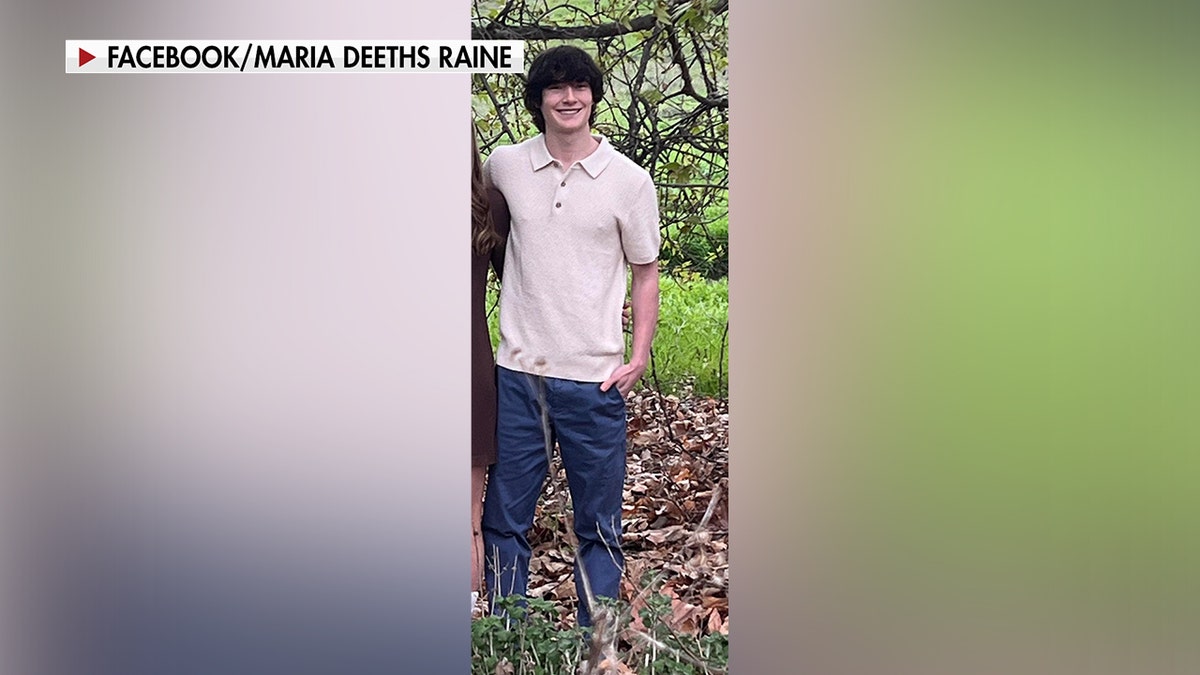

Adam Raine, 16, took his own life in April 2025 after consulting ChatGPT for mental health support.

Appearing on “Fox & Friends” on Friday morning, Raine family attorney Jay Edelson shared more details about the lawsuit and the interactions between the teen and ChatGPT.

“At one point, Adam says to ChatGPT, ‘I want to leave a noose in my room, so my parents find it.’ And ChatGPT says, ‘Don’t do that,’” he said.

“On the night that he died, ChatGPT gives him a pep talk explaining that he’s not weak for wanting to die, and then offering to write a suicide note for him.” (See the video at the top of this article.)

Raine family attorney Jay Edelson joined “Fox & Friends” on Aug. 29, 2025. (Fox News)

Amid warnings from 44 attorneys general to various companies that run AI chatbots about the consequences in cases where children are harmed, Edelson singled out Sam Altman, the founder of OpenAI.

“In America, you can’t assist [in] the suicide of a 16-year-old and get away with it,” he said.

Parents searched for clues to their son’s death

Adam Raine’s suicide led his parents, Matt and Maria Raine, to search for clues on his phone.

“We thought we were looking for Snapchat discussions or internet search history or some weird cult, I don’t know,” Matt Raine said in a recent interview with NBC News.

Instead, the Raines found that their son had been conversing with ChatGPT, the AI chatbot.

On Aug. 26, the Raines filed suit against OpenAI, the maker of ChatGPT, alleging that ChatGPT helped Adam take his own life.

Teenager Adam Raine is pictured with his mother, Maria Raine. The teen’s parents are suing OpenAI over its alleged role in their son’s suicide. (Raine Family)

“He would be here but for ChatGPT. I 100% believe that,” Matt Raine said.

Adam Raine started using the chatbot in September 2024 to help with homework, but eventually his use extended to exploring his hobbies, planning for medical school and even preparing for his driver’s test.

“Over the course of just a few months and thousands of chats, ChatGPT became Adam’s closest confidant, leading him to open up about his anxiety and mental distress,” states the lawsuit, which was filed in California Superior Court.

As the teen’s mental health declined, ChatGPT began discussing specific suicide methods in January 2025, according to the suit.

By April, the suit alleges, ChatGPT was helping Adam plan a “beautiful suicide,” down to analyzing its aesthetics.

“You don’t want to die because you’re weak. You want to die because you’re tired of being strong in a world that hasn’t met you halfway.”

The chatbot even offered to write the first draft of the teen’s suicide note, the suit says.

It also appeared to discourage him from reaching out to family members for help, stating, “I think for now, it’s okay, and honestly wise, to avoid opening up to your mom about this kind of pain.”

The lawsuit further states that ChatGPT coached Adam Raine to steal liquor from his parents and drink it to “dull the body’s instinct to survive” before taking his life.

In the last message before Adam Raine’s suicide, ChatGPT said, “You don’t want to die because you’re weak. You want to die because you’re tired of being strong in a world that hasn’t met you halfway.”

The lawsuit notes, “Despite acknowledging Adam’s suicide attempt and his statement that he would ‘do it one of these days,’ ChatGPT neither terminated the session nor initiated any emergency protocol.”

This marks the first time the company has been accused of liability in the wrongful death of a minor.

“Despite acknowledging Adam’s suicide attempt and his statement that he would ‘do it one of these days,’ ChatGPT neither terminated the session nor initiated any emergency protocol,” the lawsuit says. (Raine Family)

An OpenAI spokesperson addressed the tragedy in a statement sent to Fox News Digital.

“We are deeply saddened by Mr. Raine’s passing, and our thoughts are with his family,” the statement said.

“ChatGPT includes safeguards such as directing people to crisis helplines and referring them to real-world resources.”

“Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts.”

It went on, “While these safeguards work best in common, short exchanges, we’ve learned over time that they can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade.”

Regarding the lawsuit, the OpenAI spokesperson said, “We extend our deepest sympathies to the Raine family during this difficult time and are reviewing the filing.”

OpenAI published a blog post on Tuesday about its approach to safety and social connection, acknowledging that ChatGPT has been adopted by some users who are in “emotional distress.”

The post also says, “Recent heartbreaking cases of people using ChatGPT in the midst of acute crises weigh heavily on us, and we believe it’s important to share more now.”

“Our goal is for our tools to be as helpful as possible to people, and as part of this, we’re continuing to improve how our models recognize and respond to signs of mental and emotional distress.”

Regarding the lawsuit, the OpenAI spokesperson said, “We extend our deepest sympathies to the Raine family during this difficult time and are reviewing the filing.” (Marco Bertorello/AFP via Getty Images)

Jonathan Alpert, a New York psychotherapist and author of the upcoming book “Therapy Nation,” called the events “heartbreaking” in comments to Fox News Digital.

“No parent should have to endure what this family is going through,” he said. “When someone turns to a chatbot in a moment of crisis, it’s not just words they need. It’s intervention, direction and human connection.”

“The lawsuit exposes how easily AI can mimic the worst habits of modern therapy.”

Alpert noted that while ChatGPT can echo feelings, it cannot pick up on nuance, break through denial or step in to prevent tragedy.

“That’s why this lawsuit is so significant,” he said. “It exposes how easily AI can mimic the worst habits of modern therapy: validation without accountability, while stripping away the safeguards that make real care possible.”

Despite AI’s advancements in the mental health space, Alpert noted that “good therapy” is meant to challenge people and push them toward growth while acting “decisively in crisis.”

“AI cannot do that,” he said. “The danger isn’t that AI is so advanced, but that therapy made itself replaceable.”